Text generation is the process of producing a new sequence of text from a given input text. It can be achieved in several ways. In this tutorial we will explore three approaches:

- Markov Chains

- Machine Learning

- Deep Learning

Text Generation using Markov Chains

We can use a Markov chain to generate new text. To do so, we split the source text into individual words and then build a dictionary called chain that maps each word to the list of words that follow it in the source text.

Next, we randomly select a starting word from the source text, and then use the Markov Chain to generate the specified number of words of new text. To do this, we repeatedly choose a random next word from the list of possible next words for the current word, and then add that word to the output text. We then update the current word to be the word we just added, and repeat the process until we’ve generated the desired amount of text.

Finally, we join the generated words back into a single string using the join() method, and return the resulting string.
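To make the chain concrete, here is a small sketch that builds it for a tiny sentence and turns one successor list into transition probabilities. The helper names build_chain and transition_probabilities are illustrative and not part of the original code. Because a word that follows another several times appears several times in its list, random.choice picks it proportionally more often.

```python
from collections import Counter
from fractions import Fraction


def build_chain(text):
    """Map each word to the list of words that follow it (illustrative helper)."""
    words = text.split()
    chain = {}
    for prev_word, word in zip(words, words[1:]):
        chain.setdefault(prev_word, []).append(word)
    return chain


def transition_probabilities(successors):
    """Turn a successor list into exact next-word probabilities."""
    counts = Counter(successors)
    return {word: Fraction(n, len(successors)) for word, n in counts.items()}


chain = build_chain("the cat sat on the mat and the cat ran")
print(chain["the"])                            # ['cat', 'mat', 'cat']
print(transition_probabilities(chain["the"]))  # {'cat': Fraction(2, 3), 'mat': Fraction(1, 3)}
print("ran" in chain)                          # False: the last word has no successors
```

The last line shows why a generator needs a fallback: the final word of the source text may never have a successor, so it can be missing from chain.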

```python
import random

def generate_text(text, length):
    words = text.split()

    # Map each word to the list of words that follow it in the source text
    chain = {}
    prev_word = words[0]
    for word in words[1:]:
        if prev_word not in chain:
            chain[prev_word] = []
        chain[prev_word].append(word)
        prev_word = word

    # Pick a random starting word and walk the chain
    word = random.choice(words)
    output = [word]
    for i in range(length):
        # Dead end (e.g. the last word of the text): restart from a word
        # that is guaranteed to have successors
        if word not in chain:
            word = random.choice(list(chain))
        next_word = random.choice(chain[word])
        output.append(next_word)
        word = next_word

    return " ".join(output)
```

This can be improved further by using a defaultdict, choosing the starting word more carefully, and using a higher-order Markov chain that takes multiple previous words into account. These improvements work as follows:

Use a default dictionary: Instead of checking if a key exists in the dictionary before appending a new value, we can use the collections.defaultdict class to create a dictionary that automatically initializes new keys with an empty list:

```python
from collections import defaultdict

def generate_text(text, length):
    words = text.split()
    chain = defaultdict(list)
    prev_word = words[0]
    for word in words[1:]:
        chain[prev_word].append(word)
        prev_word = word
    ...
```
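A short demonstration of how defaultdict(list) behaves. One caveat is worth knowing: reading a missing key with square brackets also inserts it (with an empty list), so membership should be tested with in, which does not insert anything. An empty successor list would later make random.choice raise IndexError.

```python
from collections import defaultdict

chain = defaultdict(list)
chain["the"].append("cat")  # no KeyError: a missing key starts as []
print(dict(chain))          # {'the': ['cat']}

print("dog" in chain)       # False: `in` does not create the key
successors = chain["dog"]   # indexing a missing key DOES create it
print("dog" in chain)       # True
print(successors)           # []
```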

Choose starting word more intelligently: Instead of randomly selecting a starting word, we can choose a word that appears at the beginning of a sentence, since these are more likely to be good starting points for a coherent sentence:

```python
import random
from collections import defaultdict

def generate_text(text, length):
    # The first word of each sentence (splitting naively on '.')
    sentences = text.split('.')
    sentence_starts = [s.strip().split()[0] for s in sentences if len(s.strip()) > 0]

    words = text.split()
    chain = defaultdict(list)
    for i in range(1, len(words)):
        chain[words[i-1]].append(words[i])

    prev_word = random.choice(sentence_starts)
    ...
```
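The sentence-start heuristic is easy to check on a small string. The sketch below wraps the same list comprehension in a hypothetical helper and compares it with a variant, not in the original, that also treats ! and ? as sentence boundaries via re.split. Both still treat abbreviations such as "Dr." as sentence ends.

```python
import re


def sentence_starts_naive(text):
    """First word of each '.'-separated chunk, as in the code above."""
    return [s.strip().split()[0] for s in text.split('.') if len(s.strip()) > 0]


def sentence_starts_regex(text):
    """Variant that also splits on '!' and '?'."""
    return [s.strip().split()[0] for s in re.split(r"[.!?]", text) if s.strip()]


sample = "Is it late? The cat sat. Dr. Who ran!"
print(sentence_starts_naive(sample))  # ['Is', 'Dr', 'Who']
print(sentence_starts_regex(sample))  # ['Is', 'The', 'Dr', 'Who']
```

Note that repeated start words stay in the list, so random.choice favours words that begin many sentences.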

Use higher-order Markov Chains: Instead of only considering the previous word when generating the next word, we can use a higher-order Markov Chain that takes into account multiple previous words. This can result in more coherent and meaningful generated text:

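```python
import random
from collections import defaultdict

def generate_text(text, length, order=2):
    words = text.split()

    # Map each tuple of `order` consecutive words to the words that follow it
    chain = defaultdict(list)
    for i in range(order, len(words)):
        prev_words = tuple(words[i-order:i])
        chain[prev_words].append(words[i])

    # Start at a capitalised word; only consider positions whose
    # `order`-word window is a key in the chain
    start_idxs = [i for i in range(len(words) - order) if words[i][0].isupper()]
    if len(start_idxs) == 0:
        start_idxs = [0]
    start_idx = random.choice(start_idxs)

    prev_words = tuple(words[start_idx:start_idx+order])
    output = list(prev_words)
    for i in range(length):
        # Dead end: jump back to the starting window, which is always in the chain
        if prev_words not in chain:
            prev_words = tuple(words[start_idx:start_idx+order])
        word = random.choice(chain[prev_words])
        output.append(word)
        prev_words = tuple(output[-order:])

    return " ".join(output)
```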

In this version of the function, the order parameter specifies the order of the Markov chain (i.e., the number of previous words considered when generating the next word). The starting point is a random capitalised word, a rough proxy for the beginning of a sentence, and each subsequent word is generated using the most recent order words as the key in the chain dictionary. This tends to produce more coherent text, but it needs a larger source corpus to work well, because longer keys occur fewer times in the text.
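Comparing the chains for order 1 and order 2 on the same short text shows the trade-off. The helper build_chain below is an illustrative standalone version of the chain-building loop above.

```python
from collections import defaultdict


def build_chain(words, order):
    """Map each tuple of `order` consecutive words to the words that follow it."""
    chain = defaultdict(list)
    for i in range(order, len(words)):
        chain[tuple(words[i - order:i])].append(words[i])
    return dict(chain)


words = "the cat sat on the mat the cat ran".split()
first = build_chain(words, order=1)
second = build_chain(words, order=2)

print(first[("the",)])         # ['cat', 'mat', 'cat']
print(second[("the", "cat")])  # ['sat', 'ran']
print(second[("on", "the")])   # ['mat']
```

After "the" alone there are several possible continuations, but after "on the" there is exactly one. That extra context is what makes higher-order output read more coherently, and it is also why each key is seen fewer times and more source text is needed.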

We can wrap all of this in a class for easy reuse in a package or library:

```python
import random

class MarkovChainGenerator:
    def __init__(self, order=1):
        self.order = order
        self.markov_dict = {}
        self.start_words = []
        self.end_words = []

    def _add_to_dict(self, words_list):
        # Texts with no more than `order` words contribute no transitions;
        # skipping them also keeps every start key present in markov_dict
        if len(words_list) <= self.order:
            return
        for i in range(len(words_list) - self.order):
            key = tuple(words_list[i:i+self.order])
            value = words_list[i+self.order]
            if key not in self.markov_dict:
                self.markov_dict[key] = []
            self.markov_dict[key].append(value)
        self.start_words.append(tuple(words_list[:self.order]))
        self.end_words.append(words_list[-1])

    def add_text(self, text):
        words_list = text.split()
        self._add_to_dict(words_list)

    def add_corpus(self, corpus):
        for text in corpus:
            self.add_text(text)

    def generate_text(self, num_words=100):
        current_key = random.choice(self.start_words)
        text = list(current_key)
        # Keep going until we have at least num_words AND the last word
        # is one that ended a source text
        while len(text) < num_words or text[-1] not in self.end_words:
            if current_key not in self.markov_dict:
                current_key = random.choice(self.start_words)
            next_word = random.choice(self.markov_dict[current_key])
            text.append(next_word)
            current_key = tuple(text[-self.order:])
        return " ".join(text)
```

In this implementation, the MarkovChainGenerator class has five methods:

- `__init__`: initializes the object with a specified order (which defaults to 1), an empty dictionary to hold the Markov chain data, and empty lists to hold the start and end words of each text added to the generator.
- `_add_to_dict`: a private helper method that takes a list of words, generates the corresponding Markov chain data, and adds it to the dictionary.
- `add_text`: takes a string of text, splits it into words, and adds it to the Markov chain data.
- `add_corpus`: takes a list of strings (a corpus) and adds each string to the Markov chain data using add_text.
- `generate_text`: takes an optional num_words argument (defaulting to 100) and generates new text using the Markov chain data. It keeps generating until the output has at least num_words words and ends on a word that ended one of the source texts.

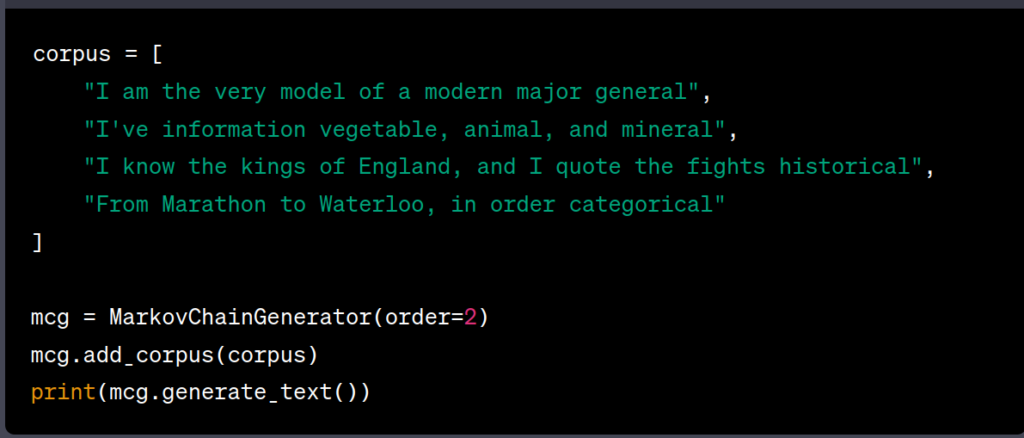

```python
corpus = [
    "I am the very model of a modern major general",
    "I've information vegetable, animal, and mineral",
    "I know the kings of England, and I quote the fights historical",
    "From Marathon to Waterloo, in order categorical"
]

mcg = MarkovChainGenerator(order=2)
mcg.add_corpus(corpus)
print(mcg.generate_text())
```

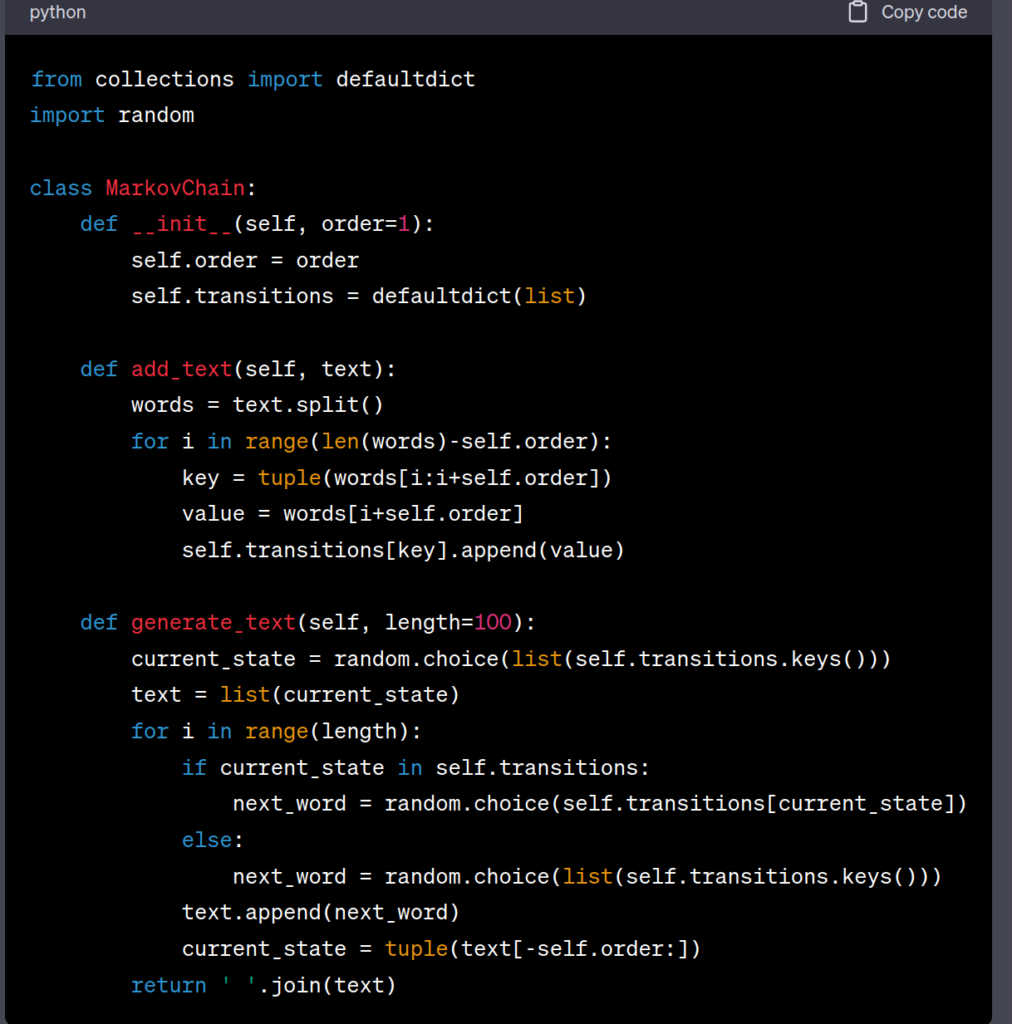

For larger texts, we can simplify the class further by using a defaultdict:

```python
from collections import defaultdict
import random

class MarkovChain:
    def __init__(self, order=1):
        self.order = order
        self.transitions = defaultdict(list)

    def add_text(self, text):
        words = text.split()
        for i in range(len(words) - self.order):
            key = tuple(words[i:i+self.order])
            value = words[i+self.order]
            self.transitions[key].append(value)

    def generate_text(self, length=100):
        current_state = random.choice(list(self.transitions.keys()))
        text = list(current_state)
        for i in range(length):
            if current_state not in self.transitions:
                # Dead end: restart from a random known state
                current_state = random.choice(list(self.transitions.keys()))
            next_word = random.choice(self.transitions[current_state])
            text.append(next_word)
            current_state = tuple(text[-self.order:])
        return ' '.join(text)
```

In this implementation, a defaultdict is used to store the transitions, with the key being a tuple of words of length order and the value being a list of words that follow the key in the input text.

The add_text method takes a string input and splits it into words, then adds the transitions to the dictionary.

The generate_text method randomly chooses a starting state from the available keys in the dictionary, then generates text by randomly selecting a word from the list of possible values for the current state. If the current state is not in the dictionary, a new state is chosen at random from the available keys.

Using defaultdict simplifies the implementation by removing the need to check whether a key exists before appending to its list. It can also make building the table slightly faster, since each insertion needs a single dictionary lookup instead of a membership test followed by a lookup, which adds up on large inputs with many unique keys.
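Because the generators above use the module-level random functions, every run produces different text. When reproducible output is useful (for tests or demos), one option is to draw from a private random.Random instance created from a seed. The functions below are a standalone sketch of the same defaultdict approach with that change; build_transitions and generate are hypothetical names, not part of the class above.

```python
import random
from collections import defaultdict


def build_transitions(text, order=1):
    """Map each `order`-word state to the list of words that follow it."""
    transitions = defaultdict(list)
    words = text.split()
    for i in range(len(words) - order):
        transitions[tuple(words[i:i + order])].append(words[i + order])
    return transitions


def generate(transitions, length=20, seed=None):
    rng = random.Random(seed)  # private RNG: same seed -> same text
    states = list(transitions)
    state = rng.choice(states)
    order = len(state)
    text = list(state)
    for _ in range(length):
        if state not in transitions:  # `in` does not insert into a defaultdict
            state = rng.choice(states)  # dead end: restart from a random state
        next_word = rng.choice(transitions[state])
        text.append(next_word)
        state = tuple(text[-order:])
    return " ".join(text)


source = "the cat sat on the mat and the cat ran away"
transitions = build_transitions(source, order=2)
print(generate(transitions, length=10, seed=7))
print(generate(transitions, length=10, seed=7))  # identical to the line above
```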

Text Generation using Machine Learning

Machine learning models can also generate text from a given input, usually called a prompt. One example is sending a prompt to one of OpenAI's hosted GPT models through the OpenAI API.
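Requests to such models typically accept a temperature parameter, as the example that follows does. Conceptually, temperature divides the model's raw scores (logits) before the softmax that turns them into probabilities: values below 1 sharpen the distribution toward the most likely token, and values above 1 flatten it. The sketch below illustrates that standard formula on made-up logits; it is not the OpenAI service's internal code.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Convert logits to probabilities after dividing by the temperature."""
    scaled = [x / temperature for x in logits]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - largest) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```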

```python
from openai import OpenAI

# Requires the openai package, version 1.0 or later
client = OpenAI(api_key="YOUR_API_KEY")  # replace with your OpenAI API key

# define the prompt for text generation
prompt = "The quick brown fox"

# set the temperature and max_tokens parameters for text generation
temperature = 0.7
max_tokens = 100

# ask a completions model to continue the prompt
response = client.completions.create(
    model="gpt-3.5-turbo-instruct",  # example model name; use one available to your account
    prompt=prompt,
    temperature=temperature,
    max_tokens=max_tokens,
)

# extract the generated text from the response object
generated_text = response.choices[0].text

# print the generated text
print(generated_text)
```

Text Generation Using Transformers

We can also build Transformer-based models for text generation with TensorFlow or PyTorch, following these steps:

- Import the necessary libraries and modules

- Define the hyperparameters for the model

- Define the input and output sequences

- Define the encoder and decoder layers

- Define the transformer model

- Define the loss function and optimizer

- Train the model

- Generate new text using the trained model
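The core operation inside the encoder and decoder layers is scaled dot-product attention, softmax(QK^T / sqrt(d)) V. The pure-Python sketch below shows a single attention head with the causal mask that a text-generating decoder needs: position i only attends to positions 0 to i. For simplicity it uses the input vectors directly as queries, keys and values, whereas real layers (such as Keras MultiHeadAttention) apply learned projections and use several heads.

```python
import math


def causal_self_attention(x):
    """Single-head scaled dot-product self-attention with a causal mask.

    x is a list of n vectors of dimension d; here Q = K = V = x.
    """
    n, d = len(x), len(x[0])
    out = []
    for i in range(n):
        # Position i may only attend to positions 0..i (the causal mask)
        scores = [sum(a * b for a, b in zip(x[i], x[j])) / math.sqrt(d) for j in range(i + 1)]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Weighted sum of the visible value vectors
        out.append([sum(w * x[j][k] for j, w in enumerate(weights)) for k in range(d)])
    return out


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in causal_self_attention(x):
    print([round(v, 3) for v in row])  # first row is [1.0, 0.0]: position 0 sees only itself
```

Because of the mask, changing a later vector never changes the outputs at earlier positions, which is what lets a model be trained on whole sequences and still generate one token at a time.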

```python
import numpy as np
import tensorflow as tf

# Define hyperparameters
max_seq_length = 50
batch_size = 32
embedding_dim = 128
num_heads = 4
dff = 512  # width of the feed-forward sublayers
num_layers = 4
dropout_rate = 0.1
vocab_size = 10000
epochs = 10
key_dim = embedding_dim // num_heads  # size of each attention head


def add_and_norm(x, sublayer_output):
    # Residual connection followed by layer normalization
    sublayer_output = tf.keras.layers.Dropout(dropout_rate)(sublayer_output)
    return tf.keras.layers.LayerNormalization(epsilon=1e-6)(x + sublayer_output)


def feed_forward(x):
    x = tf.keras.layers.Dense(dff, activation="relu")(x)
    return tf.keras.layers.Dense(embedding_dim)(x)


# Define input and output sequences (integer token ids)
inputs = tf.keras.layers.Input(shape=(max_seq_length,), dtype="int32")
targets = tf.keras.layers.Input(shape=(max_seq_length,), dtype="int32")

# Define encoder layers (positional encodings are omitted for brevity)
encoder = tf.keras.layers.Embedding(vocab_size, embedding_dim)(inputs)
encoder = tf.keras.layers.Dropout(dropout_rate)(encoder)
for i in range(num_layers):
    attn = tf.keras.layers.MultiHeadAttention(num_heads, key_dim)(encoder, encoder)
    encoder = add_and_norm(encoder, attn)
    encoder = add_and_norm(encoder, feed_forward(encoder))

# Define decoder layers
decoder = tf.keras.layers.Embedding(vocab_size, embedding_dim)(targets)
decoder = tf.keras.layers.Dropout(dropout_rate)(decoder)
for i in range(num_layers):
    # Causal mask: each position may only attend to earlier positions
    attn = tf.keras.layers.MultiHeadAttention(num_heads, key_dim)(decoder, decoder, use_causal_mask=True)
    decoder = add_and_norm(decoder, attn)
    # Cross-attention over the encoder output
    attn = tf.keras.layers.MultiHeadAttention(num_heads, key_dim)(decoder, encoder)
    decoder = add_and_norm(decoder, attn)
    decoder = add_and_norm(decoder, feed_forward(decoder))

# Define output layer: raw logits over the vocabulary
outputs = tf.keras.layers.Dense(vocab_size)(decoder)

# Define the model
model = tf.keras.models.Model(inputs=[inputs, targets], outputs=outputs)

# Define the loss function and optimizer (from_logits=True matches the logits output)
loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)
optimizer = tf.keras.optimizers.Adam()

# Compile the model
model.compile(optimizer=optimizer, loss=loss)

# Train the model. `dataset` is not defined in this tutorial: it is assumed to be a
# tf.data.Dataset of ((encoder_ids, decoder_ids), next_token_ids) batches of size batch_size.
# The encoder is not causally masked, so its input must not contain the tokens being
# predicted; for plain next-word generation the decoder stack alone is enough.
model.fit(dataset, epochs=epochs)


# Generate new text using the trained model. `tokenizer` is assumed to be a fitted
# tokenizer with texts_to_sequences() and index_word, like the legacy Keras Tokenizer.
def generate_text(model, tokenizer, start_string, num_words=20):
    token_ids = tokenizer.texts_to_sequences([start_string])[0]
    output_string = start_string
    for _ in range(num_words):
        if not token_ids or len(token_ids) >= max_seq_length:
            break
        input_eval = tf.keras.utils.pad_sequences([token_ids], maxlen=max_seq_length, padding="post")
        predictions = model.predict([input_eval, input_eval], verbose=0)
        # The logits at the last real position predict the next token
        predicted_index = int(np.argmax(predictions[0, len(token_ids) - 1, :]))
        if predicted_index == 0:  # 0 is the padding index, not a word
            break
        token_ids.append(predicted_index)
        output_string += " " + tokenizer.index_word.get(predicted_index, "<unk>")
    return output_string
```

Text Generation Using PyTorch Transformer

```python
import math

import torch
import torch.nn as nn
import torch.optim as optim

# Device to move the model and data to
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


class TransformerModel(nn.Module):
    def __init__(self, input_dim, output_dim, hidden_dim, n_layers, n_heads, pf_dim, dropout):
        super().__init__()
        self.embedding = nn.Embedding(input_dim, hidden_dim)
        # Learned positional embeddings for up to 1000 positions
        self.pos_embedding = nn.Embedding(1000, hidden_dim)
        self.encoder_layer = nn.TransformerEncoderLayer(hidden_dim, n_heads, pf_dim, dropout)
        self.encoder = nn.TransformerEncoder(self.encoder_layer, n_layers)
        self.fc_out = nn.Linear(hidden_dim, output_dim)
        self.dropout = nn.Dropout(dropout)
        self.scale = math.sqrt(hidden_dim)

    def forward(self, src, src_mask):
        # src = [src len, batch size]
        # src_mask = [src len, src len], e.g. nn.Transformer.generate_square_subsequent_mask(src len)
        positions = torch.arange(0, src.shape[0], device=src.device).unsqueeze(1)
        src = self.dropout(self.embedding(src) * self.scale + self.pos_embedding(positions))
        # src = [src len, batch size, hid dim]
        src = self.encoder(src, mask=src_mask)
        # src = [src len, batch size, hid dim]
        # Use the last position's representation to predict the next token
        output = self.fc_out(src[-1, :, :])
        # output = [batch size, out dim]
        return output
```
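Both models above only output scores for the next token; text is produced by calling the model repeatedly and appending its choice each time (autoregressive decoding). The sketch below shows greedy decoding in plain Python. A hypothetical lookup table stands in for a trained network so that it runs without TensorFlow or PyTorch; with a real model, next_token_logits would run a forward pass over the tokens generated so far.

```python
def greedy_decode(next_token_logits, prompt, max_new_tokens, eos=None):
    """Repeatedly append the highest-scoring next token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        logits = next_token_logits(tokens)
        next_token = max(range(len(logits)), key=logits.__getitem__)
        tokens.append(next_token)
        if next_token == eos:
            break
    return tokens


# Hypothetical stand-in for a trained model: fixed next-token scores per last token
VOCAB = ["<eos>", "the", "cat", "sat"]
TABLE = {
    0: [1.0, 0.0, 0.0, 0.0],
    1: [0.0, 0.0, 2.0, 0.5],
    2: [0.1, 0.0, 0.0, 3.0],
    3: [2.0, 0.5, 0.0, 0.0],
}


def toy_logits(tokens):
    return TABLE[tokens[-1]]


ids = greedy_decode(toy_logits, prompt=[1], max_new_tokens=10, eos=0)
text = " ".join(VOCAB[i] for i in ids)
print(text)  # the cat sat <eos>
```

Greedy decoding always picks the most likely token, which is deterministic but can be repetitive; sampling from the softmax of the logits (optionally with a temperature) is the usual alternative.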

There are many other ways to generate text in Python apart from Markov chains and GPT. These other examples include:

- Recurrent Neural Networks (RNNs) – RNNs are a type of neural network that can be used for sequence prediction and generation, including text generation.

- Long Short-Term Memory (LSTM) Networks – LSTMs are a type of RNN that are particularly good at processing and generating sequences of data.

- Neural Machine Translation (NMT) – NMT models are used for machine translation tasks, but can also be used for text generation.

- Variational Autoencoders (VAEs) – VAEs are a type of generative model that can be used for text generation.

- Transformer-based Models – Transformer models, like GPT, are a type of neural network that uses attention mechanisms to generate text.

- Neural Dialogue Models – Dialogue models are designed specifically for generating conversational text, and can be based on RNNs or transformer models.

The best choice among these techniques depends on the specific task and the requirements of the project.

We have seen how to generate text in Python using several methods. Thank you for your attention.

Jesus Saves

By Jesse E. Agbe (JCharis)