In a previous post, we learnt about the various ways we can productionize our machine learning models.

In this tutorial we will look at how to serve our machine learning models as an API, which is one of the ways to productionize an ML model.

There are several ways to achieve this. We can use Flask, Bottle, Django, etc. In this tutorial, however, we will look at another framework called FastAPI.

FastAPI is a simple, high-performance framework for building APIs (Application Programming Interfaces).

It draws on ideas from well-known frameworks in Python as well as JavaScript.

Getting Started

To work with FastAPI we first need to install it, either on its own or together with its optional dependencies:

pip install fastapi

or

pip install "fastapi[all]"

FastAPI requires an ASGI server to run, so we will also install uvicorn (hypercorn is an alternative).

pip install uvicorn
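For context, ASGI is the interface between the server and the application. The sketch below is not FastAPI code. It is a bare ASGI callable, with the hypothetical names `hello_asgi` and `call_once`, meant only to show the kind of object an ASGI server drives and that FastAPI builds for you.

```python
import asyncio
import json


async def hello_asgi(scope, receive, send):
    """A bare ASGI application: the server calls this for each connection."""
    if scope["type"] != "http":
        return  # this sketch ignores anything that is not a plain HTTP request
    body = json.dumps({"text": "Hello API Masters"}).encode("utf-8")
    await send({
        "type": "http.response.start",
        "status": 200,
        "headers": [(b"content-type", b"application/json")],
    })
    await send({"type": "http.response.body", "body": body})


async def call_once(app, path="/"):
    """Play the role of the server for one request and collect what the app sends."""
    sent = []

    async def receive():
        return {"type": "http.request", "body": b"", "more_body": False}

    async def send(message):
        sent.append(message)

    scope = {"type": "http", "method": "GET", "path": path, "headers": []}
    await app(scope, receive, send)
    return sent


if __name__ == "__main__":
    messages = asyncio.run(call_once(hello_asgi))
    print(messages[0]["status"], messages[1]["body"].decode("utf-8"))
```

With FastAPI you never write this by hand. The decorators and return values you write are translated into these messages for you.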

Building A Basic App

Just like in Flask, we use decorators to define our routes, and building the app follows almost the same pattern.

Our app can be run in two ways. The second is generally recommended since it can reload automatically whenever the code changes.

python app.py

or

uvicorn app:app --reload

You can then navigate to localhost, on uvicorn's default port 8000, to see your app in action.

http://127.0.0.1:8000/

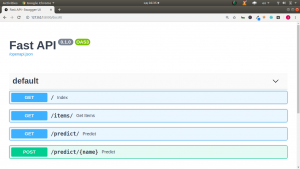

With FastAPI, you get two sets of interactive documentation from just a few lines of code. Appending either /docs or /redoc to the base URL brings up one of them.

http://127.0.0.1:8000/docs

This yields the OpenAPI Swagger UI.

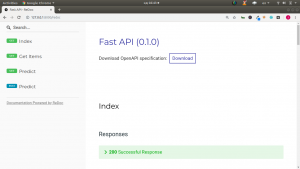

http://127.0.0.1:8000/redoc

This uses the ReDoc UI, which is also generated out of the box.

Embedding ML into FastAPI/Serving ML Models as API

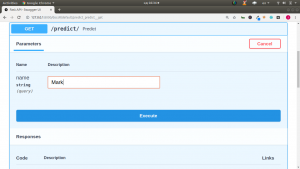

To use our model as an API, we first need to decide on the structure of what we want to predict. This determines how we set up our endpoints and resources.

In our case there is only one parameter, the first name, which is the text used for the prediction.

We will be using a gender classifier for first names. We pass the name to our endpoint following the normal API convention.
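For instance, when the name is sent as a query parameter it travels in the URL's query string. The helper below is purely illustrative (`build_predict_url` is a hypothetical name, not part of FastAPI) and assumes the app listens on 127.0.0.1:8000 and exposes the /predict/ route from the full code further down.

```python
from urllib.parse import urlencode


def build_predict_url(name, base="http://127.0.0.1:8000"):
    """Return the GET URL that asks the API to classify `name`."""
    # urlencode escapes spaces and other characters that are unsafe in a URL.
    return f"{base}/predict/?{urlencode({'name': name})}"


print(build_predict_url("Mary"))
```

Opening the printed URL in a browser, or fetching it with any HTTP client, sends the name to the GET endpoint.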

The basic workflow includes

- Import Packages

- Create Our Route (/predict)

- Load our Models and Vectorizer

- Receive Input From Endpoint

- Vectorize the data/name

- Make our predictions

- Send Result as JSON

The overall code for our app is as follows:

# Core Pkg
import uvicorn
from fastapi import FastAPI, Query

# ML Aspect
import joblib

# Vectorizer (joblib.load accepts a path, so no file handle is left open)
gender_cv = joblib.load("models/gender_vectorizer.pkl")

# Models
gender_clf = joblib.load("models/gender_nv_model.pkl")

# init app
app = FastAPI()


# Routes
@app.get('/')
async def index():
    return {"text": "Hello API Masters"}


@app.get('/items/')
async def get_items(name: str = Query(None, min_length=2, max_length=7)):
    return {"name": name}


# ML Aspect
# Query(...) makes `name` required, so the model never receives None
@app.get('/predict/')
async def predict(name: str = Query(..., min_length=2, max_length=12)):
    vectorized_name = gender_cv.transform([name]).toarray()
    prediction = gender_clf.predict(vectorized_name)
    if prediction[0] == 0:
        result = "female"
    else:
        result = "male"
    return {"orig_name": name, "prediction": result}


# Using Post (renamed so it does not shadow the GET handler above)
@app.post('/predict/{name}')
async def predict_post(name: str):
    vectorized_name = gender_cv.transform([name]).toarray()
    prediction = gender_clf.predict(vectorized_name)
    if prediction[0] == 0:
        result = "female"
    else:
        result = "male"
    return {"orig_name": name, "prediction": result}


if __name__ == '__main__':
    uvicorn.run(app, host="127.0.0.1", port=8000)
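The app expects two pickled files under models/, and the trained files are not included in this post. Here is a rough sketch of how comparable ones could be produced. Everything in it is an assumption: the CountVectorizer and MultinomialNB choices are guessed from the file names, the six names are a toy stand-in for a real dataset, and `train_and_save` is a hypothetical helper. The labels follow the app's convention of 0 for female and 1 for male.

```python
import os

import joblib
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB

# Toy data for illustration only; a usable model needs a far larger dataset.
NAMES = ["mary", "anna", "linda", "john", "peter", "david"]
LABELS = [0, 0, 0, 1, 1, 1]  # 0 = female, 1 = male, matching the app


def train_and_save(out_dir="models"):
    """Fit a vectorizer and a classifier, then pickle both into out_dir."""
    os.makedirs(out_dir, exist_ok=True)
    cv = CountVectorizer()  # lowercases input by default
    features = cv.fit_transform(NAMES)
    clf = MultinomialNB().fit(features, LABELS)
    joblib.dump(cv, os.path.join(out_dir, "gender_vectorizer.pkl"))
    joblib.dump(clf, os.path.join(out_dir, "gender_nv_model.pkl"))
    return cv, clf


if __name__ == "__main__":
    train_and_save()
```

A model trained on whole-name tokens like this only recognizes names it has seen, so treat it as a way to get the app running rather than as a real classifier.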

In conclusion, FastAPI makes it quite easy to create APIs with just a few lines of code.

For more on productionizing and building machine learning apps, you can check out this Mega course or the Udemy Course.

Below is the video tutorial of the entire process.

Thanks For Your Time

Jesus Saves

By Jesse E. Agbe (JCharis)

Hello,

where is gender_nv_model.pkl and .. files? 🙂

Thanks

Hello Z

You can get the files here https://github.com/Jcharis/Machine-Learning-Web-Apps/tree/master/Gender-Classifier-ML-App-with-Flask%20%2B%20Bootstrap

Hope it helps