All software needs a processing unit to run, but the kind of processing unit and its circuitry, among other things, strongly influence the software's performance and speed. Large Language Models (LLMs) are also a form of software, and they need the right infrastructure for fast inference.

In this post, we will explore the different types of processing units and microprocessors used to run everything from traditional software to modern software augmented with LLMs.

Remember that LLMs are not just sequential predictors of language. They also capture, in a sense, the way humans think, since our language is a reflection of our thoughts. Hence language models can also be called thought models, as they model our thinking patterns.

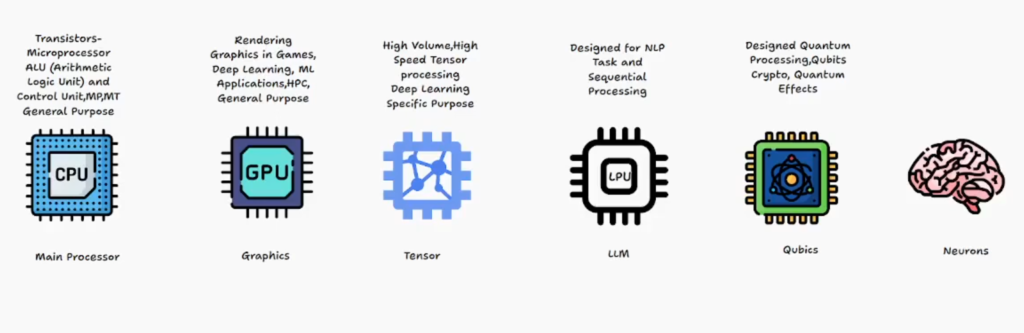

Types of Processing Units

Modern computing circuitry is built from transistors, from which we progressed to microprocessors such as the CPU. Below is a list of processing units:

- CPU: Central Processing Unit

- GPU: Graphics Processing Unit

- TPU: Tensor Processing Unit

- LPU: Language Processing Unit

- QPU: Quantum Processing Unit

- DPU: Data Processing Unit

Let us look at the differences between them and their use cases.

Here is a comparison table of the CPU, GPU, TPU, and quantum processor, along with their typical use cases:

| Processor Type | Description | Use Cases |
|---|---|---|
| CPU (Central Processing Unit) | A general-purpose processor optimized for fast, low-latency execution of sequential tasks. CPUs have relatively few cores with high clock speeds and large caches. | General-purpose computing, such as web browsing, word processing, and light gaming. |
| GPU (Graphics Processing Unit) | A processor designed for parallel processing of large data sets, originally for graphics rendering. GPUs have a large number of simpler cores with lower clock speeds and smaller caches per core. | Graphics rendering, scientific simulations, machine learning, and data analytics. |
| TPU (Tensor Processing Unit) | A custom-built processor from Google designed specifically for machine learning tasks. TPUs are optimized for tensor operations, especially high-throughput matrix multiplication. | Machine learning inference and training, neural network computations, and large-scale data processing. |
| Quantum Processor | A processor that uses the principles of quantum mechanics to perform computations. Quantum processors have the potential to solve certain types of problems much faster than classical processors. | Quantum simulations, optimization problems, and cryptography. |

Note that while CPUs, GPUs, and TPUs are all digital processors that use classical computing principles, quantum processors use quantum mechanics to perform computations. Quantum processors are still in the early stages of development and are not yet as powerful as classical processors for most tasks. However, they have the potential to solve certain types of problems much faster than classical processors, making them a promising area of research.
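To make the contrast with classical bits a little more concrete, here is a minimal sketch in plain Python that simulates a single qubit on an ordinary CPU. The helper names (`apply_hadamard`, `probabilities`) are made up for this illustration and do not come from any quantum SDK. Real quantum programs would be written with a toolkit such as Qiskit or Q#.

```python
import math

# A single-qubit state is a pair of amplitudes (for |0> and |1>).
# This is a classical simulation, only meant to illustrate superposition.

def apply_hadamard(state):
    """Apply the Hadamard gate, which turns |0> into an equal superposition."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))

def probabilities(state):
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    return tuple(abs(x) ** 2 for x in state)

zero = (1.0, 0.0)                 # the classical-looking state |0>
plus = apply_hadamard(zero)       # equal superposition of |0> and |1>
probs = probabilities(plus)       # roughly (0.5, 0.5)
back = apply_hadamard(plus)       # applying the gate twice returns to |0>

print("probabilities after one Hadamard:", probs)
print("state after two Hadamards:", back)
```

A classical bit is always either 0 or 1. The simulated qubit above has a 50% chance of being measured as either value until it is actually measured.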

The following table summarizes the main differences between the CPU, GPU, TPU, and quantum processor:

| Feature | CPU (Central Processing Unit) | GPU (Graphics Processing Unit) | TPU (Tensor Processing Unit) | Quantum Processor |
|---|---|---|---|---|
| Purpose | General-purpose processing | Graphics rendering and parallel compute | Machine learning and deep learning | Quantum computing |
| Architecture | Few powerful cores optimized for sequential execution, von Neumann model | Massively parallel processing, SIMD/SIMT model | Parallel processing built around matrix-multiply units (systolic arrays) | Quantum-mechanical processing, quantum bits (qubits) |
| Performance | High clock speed, low latency | High memory bandwidth, high floating-point throughput | High matrix-multiplication throughput, high memory bandwidth | Potential speedups on specific problems; current hardware still has high error rates |
| Power Consumption | Moderate | High | High | Low at the chip level, but supporting systems such as cryogenic cooling can draw significant power |
| Programming Model | Imperative, procedural | Data-parallel kernels launched from a host program | Framework-driven, compiled computation graphs | Quantum circuits (gates and measurements) |
| Programming Languages and Frameworks | C, C++, Java, Python, etc. | CUDA C/C++, OpenCL, Python (via libraries), etc. | TensorFlow, JAX, PyTorch (via XLA), etc. | Q# (by Microsoft), Qiskit (by IBM), etc. |
| Memory Hierarchy | Multi-level hierarchy (cache, main memory, storage) | Smaller caches, high-bandwidth GDDR (Graphics Double Data Rate) or HBM memory | On-chip buffers, high-bandwidth memory | Qubit registers, with classical memory in the control system |
| Data Types | Binary (0/1) | Binary (0/1) | Binary (0/1) | Qubits (0, 1, or a superposition of both; can be entangled) |
| Computational Operations | Arithmetic, logical, memory access | Matrix multiplication, convolution, pooling | Matrix multiplication, convolution, pooling | Quantum gates, entangling operations, measurement |
| Applications | Web servers, databases, productivity software | Computer-aided design, video games, scientific simulations | Machine learning, deep learning, natural language processing | Quantum cryptography, quantum simulation, quantum optimization |

The table above is a simplified summary. However, it should give you a rough idea of the main differences between these types of processors.
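Matrix multiplication is the operation that GPUs and TPUs are built to accelerate, and it dominates LLM inference. The sketch below, in plain Python, shows why it parallelizes so well: every output row can be computed independently of the others. The function names (`matmul_serial`, `matmul_parallel`) and the `workers` parameter are hypothetical, chosen only for this example. Python threads are used just to show how the work splits up; because of Python's global interpreter lock, this version is not expected to run faster than the serial one.

```python
from concurrent.futures import ThreadPoolExecutor

def dot(row, col):
    """Dot product of one row of A with one column of B."""
    return sum(a * b for a, b in zip(row, col))

def matmul_serial(A, B):
    """CPU-style: compute the output cells one after another."""
    cols = list(zip(*B))  # columns of B
    return [[dot(row, col) for col in cols] for row in A]

def matmul_parallel(A, B, workers=4):
    """GPU/TPU-style idea: each output row is an independent task."""
    cols = list(zip(*B))

    def compute_row(row):
        return [dot(row, col) for col in cols]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves the order of the input rows
        return list(pool.map(compute_row, A))

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]

serial_result = matmul_serial(A, B)
parallel_result = matmul_parallel(A, B)

print(serial_result)    # [[19, 22], [43, 50]]
print(parallel_result)  # same values, computed as independent row tasks
```

On real hardware, a GPU or TPU runs thousands of such independent multiply-and-add operations at the same time, which is where the speed advantage for LLM workloads comes from.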

For a video description, you can check out this vlog

You can also check out the video tutorial below on how to use Groq, an LPU service for working with large language models.

Thank you for your attention

Jesus Saves

By Jesse E.Agbe(JCharis)